Most people think of GitHub Copilot as a code suggestion tool, something that helps autocomplete the line you're typing. But in this post, I’ll show you how I got Copilot to complete an entire Jira ticket, including reading from Figma designs, processing attachments, and writing working code, all from a two-line prompt.

The result? A production-ready feature, implemented in less than two minutes.

This wasn’t magic. It required three key ingredients:

- A copilot-instructions.md file to teach Copilot how the codebase is structured.

- A set of MCP servers that let Copilot read a Jira ticket, Figma designs, and attachments.

- A structured “magic prompt” that told Copilot how to fetch, reason, and implement.

In this article, I’ll walk you through the setup I used, how the pieces fit together, and how you can replicate the same workflow in your own team.

Layout Overview: What I Built

To demonstrate this workflow, I used a real app, a Jira ticket, and Figma design files to implement a small but complete feature: a User Details form inside a task management tool called TaskFlow.

TaskFlow App

TaskFlow is a multi-feature app that includes:

- Creating and managing tasks

- A Kanban-style task board

- Team and user management

- Analytics and insights

This is a complex app, not something Copilot could meaningfully add features to without some preparation.

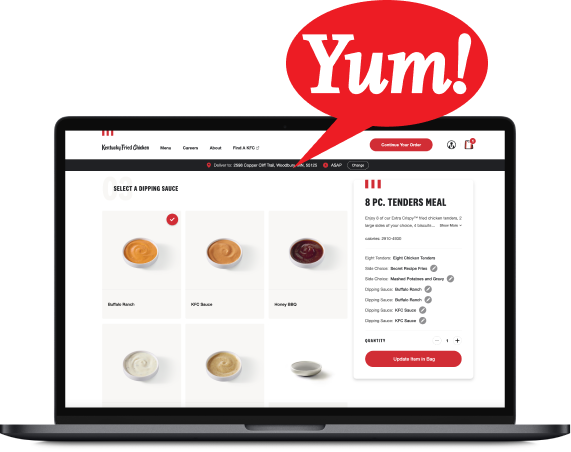

What I had Copilot build was a User Details section that lets users enter their first and last name through a form. This new section was defined in a Figma file that outlined exactly what it should look like, including its different UI states.

The Jira Ticket

All the details for how the User Details section should look and act were stored in a Jira ticket. Tickets like this are where most features start. The ticket included:

- A written feature description

- A list of UI and functional requirements

- Several Figma links with design specs

- An image attachment to be included in the layout

Notice the multi-modal approach. Not only did the ticket include links to the Figma designs above, it also included image attachments to be parsed by the AI implementing the ticket.

This setup means that, to complete the ticket, Copilot had to integrate with Jira and Figma and read images, all while writing code that made sense in my already complex codebase.

Giving Copilot Context

Out of the box, GitHub Copilot is great at autocompleting code when you're already in the middle of writing something. But when you ask it to add a brand-new feature from scratch, it needs more than just the open file. It needs to understand how your codebase works, where things go, what technologies you're using, what patterns to follow.

That’s exactly what the .github/copilot-instructions.md file is for.

This is a special Markdown file that Copilot reads, and it acts like a mini onboarding guide that provides context. Think of it as the document you'd give to a new teammate on their first day.

With this file in place, Copilot doesn’t have to guess where to add things or how the codebase works. It can understand how to match your coding style, reuse existing components, and integrate changes cleanly into the project structure.

At Bitovi, we've built a custom Copilot prompt chain that creates this file for you. Check it out here.

What Is MCP and Why Does It Matter?

To get GitHub Copilot to build a real feature, not just suggest lines of code, it needs more than what’s in your editor. It needs to understand the broader context: what the ticket says, what the designs look like, what files are attached, and how that all maps to your codebase.

That’s where Model Context Protocol, or MCP, comes in.

What is MCP?

MCP is a protocol that allows large language models to fetch and use external resources while generating responses. Instead of only seeing the current file in VS Code, Copilot can reach out to:

- Jira to read full ticket details

- Figma to pull design specs, UI states, and even generated HTML/CSS

- Other systems like Notion, Google Docs, GitHub issues, or internal tools

MCP essentially turns Copilot into an active participant, capable of looking things up, gathering information, and reasoning across different systems before writing any code.
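To make this concrete: MCP messages are JSON-RPC 2.0, and a client asks a server to run one of its tools with a `tools/call` request. The sketch below builds one such request in Python. The tool name `get_issue` and its `issue_key` argument are hypothetical, chosen only for illustration; each real server advertises its own tool names and argument schemas.

```python
import json


def build_tool_call(request_id: int, tool_name: str, arguments: dict) -> dict:
    """Build a JSON-RPC 2.0 request asking an MCP server to run a tool."""
    return {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    }


# Hypothetical tool name and argument; a real server lists its tools
# in response to a "tools/list" request.
request = build_tool_call(1, "get_issue", {"issue_key": "USER-10"})
print(json.dumps(request, indent=2))
```

In practice you never write these messages by hand. Copilot's MCP client generates them whenever the model decides it needs data from a connected server.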

How I Used It

To give Copilot access to everything it needed, I configured three MCP servers:

- Jira MCP server – Used to read the full ticket description and metadata

- Figma MCP server – Used to pull Dev Mode designs, code snippets, and UI states

- Bitovi’s custom Jira Offbridge server – Used to fetch attachments (like images) from the ticket (Jira's MCP doesn't support this at the time of writing).

The Figma and Atlassian (Jira) servers can be added directly from the VS Code MCP Server Gallery.

- Click “Install” from the gallery page

- VS Code opens and prompts you to add the server

- You can configure it globally or in a .vscode/mcp.json file inside your project

Figma's MCP server must be run locally from the app and requires "Dev Mode". You can turn it on in preferences, and the designs you want to pull from must be open in the "active" tab of the app.

Bitovi's custom server can be installed by following the instructions here.

Here’s a simplified example of what my configuration looked like:
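As a minimal sketch of that shape, assuming the top-level `servers` layout that VS Code reads from `.vscode/mcp.json`, the Python snippet below builds a three-server configuration and prints it as JSON that could be pasted into that file. The server keys and all three URLs are assumptions or placeholders, not the exact values from my setup; check each server's install instructions for the real endpoint and transport type.

```python
import json

# Assumed layout: VS Code reads MCP servers from a top-level "servers" object.
# Every key and URL below is an assumption or placeholder for illustration.
mcp_config = {
    "servers": {
        "atlassian": {
            "type": "sse",
            "url": "https://mcp.atlassian.com/v1/sse",  # assumed remote endpoint
        },
        "figma": {
            "type": "sse",
            "url": "http://127.0.0.1:3845/sse",  # assumed local Dev Mode endpoint
        },
        "jira-attachments": {
            "type": "http",
            "url": "https://example.com/mcp",  # placeholder for Bitovi's custom server
        },
    }
}


def render_mcp_config(config: dict) -> str:
    """Serialize the configuration as pretty-printed JSON."""
    return json.dumps(config, indent=2)


print(render_mcp_config(mcp_config))
```

Keeping the file inside the project rather than in global settings means teammates who clone the repository get the same server list.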

The Magic Prompt That Runs It All

Once everything was set up — the copilot-instructions.md file in place and the MCP servers connected — I kicked off the entire process with just two lines:

1. Open the repository on GitHub: https://github.com/bitovi/ai-enablement-prompts.

2. Execute the prompt writing-code/generate-feature/generate-feature.md

{TICKET_NUMBER} = USER-10 (replace with your ticket id)

That’s it.

These two lines tell Copilot where to find a detailed, structured prompt we created at Bitovi — one that guides it step-by-step through reading a Jira ticket, gathering external context, and implementing the required feature in a way that actually fits the project.

The prompt itself lives inside the GitHub repo under:

writing-code/generate-feature/generate-feature.md

Here’s what it instructs Copilot to do.

Prompt Summary: What Copilot Does

Step 1: Retrieve the Jira Ticket - Use the Atlassian MCP server to fetch ticket metadata, including description, links, and fields.

Step 2: Parse the Ticket Content - Scan for Figma URLs and any mention of file attachments.

Step 3: Gather Supplementary Context - If Figma links are found, use the Figma MCP server to retrieve component code, screenshots and design annotations. If attachments are referenced, use Bitovi's custom MCP server to fetch them.

Step 4: Synthesize the Information - Organize everything into a structured mental model of the feature and match attachments to relevant parts of the ticket content.

Step 5: Implement the Feature - Follow the architecture and conventions defined in copilot-instructions.md. Match the UI exactly to the Figma design: styling, structure, and spacing. Implement only what’s described: no assumptions, no extras.
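Step 2 is the most mechanical of these, so it is easy to illustrate. The sketch below shows one way the "scan for Figma URLs and attachment mentions" idea could look in plain Python. It is not what Copilot runs internally (the prompt describes the step in natural language); the regular expressions, the function name `parse_ticket`, and the sample ticket text are all made up for illustration.

```python
import re

# Matches figma.com links up to the next whitespace or closing bracket.
FIGMA_URL = re.compile(r"https?://(?:www\.)?figma\.com/[^\s)>\]]+")
# Assumed wording cues that a ticket refers to an attached file.
ATTACHMENT_HINT = re.compile(
    r"\b(?:attach(?:ed|ments?)?|screenshot)\b", re.IGNORECASE
)


def parse_ticket(description: str) -> dict:
    """Return the Figma links found and whether attachments are mentioned."""
    return {
        "figma_links": FIGMA_URL.findall(description),
        "mentions_attachments": bool(ATTACHMENT_HINT.search(description)),
    }


# Made-up ticket text for illustration only.
sample = (
    "Add a User Details form. "
    "Design: https://www.figma.com/design/abc123/TaskFlow?node-id=1-2 "
    "Use the attached logo image in the header."
)
result = parse_ticket(sample)
print(result)
```

The output of a step like this decides which MCP servers get called in Step 3: Figma links go to the Figma server, and attachment mentions trigger a fetch through the attachment server.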

This prompt doesn’t just say “build a form.” It gives Copilot the context, access, and discipline to implement something as if a senior engineer were doing it, which is exactly the goal.

It knows where to look, what to extract, and how to generate code that fits naturally into the codebase — because we told it how.

Wrapping Up

What started as a typical Jira ticket (a form, a few design links, and an image attachment) turned into a complete feature, implemented in less than two minutes by GitHub Copilot.

But this wasn’t out-of-the-box behavior. It worked because I gave Copilot the right environment:

- A copilot-instructions.md file so it understood the architecture and conventions of the codebase

- MCP servers that let it read from Jira, parse Figma designs, and access ticket attachments

- A structured, opinionated prompt that walked it through the process like an experienced engineer

Together, those pieces turned Copilot into more than just a code assistant. It became a full-feature contributor — able to interpret requirements, synthesize design and ticket context, and write production-ready code that fit right into a real-world app.

This workflow has fundamentally changed how I think about building features, and it’s something we’re helping other teams adopt as well.

If you’re curious about how to bring this kind of automation into your own engineering workflow — or if you want help setting up your codebase for AI-driven development — feel free to reach out to Bitovi. We’d be happy to help you get started.