When you provision and configure the infrastructure and software that make up an application, you’ll end up with data that needs to be stored securely for later use. This data can range from a default user password to an authentication token to the private key for an SSL certificate.

You’ll need a way to store this information securely while still allowing authorized people to access it. If you’re already using AWS, AWS Secrets Manager is a natural choice for storing and retrieving this data.

Getting Started with AWS Secrets Manager

Before you start entering data into Secrets Manager (SM), think about a naming convention for the secrets you store. The secret name is the basis of your security policies. For example, when you create the IAM roles that allow access, you can grant access to all secrets (*) or restrict access based on the name.

Here’s an example resource ARN that restricts access to only those secrets whose names start with dev-:

arn:aws:secretsmanager:us-west-2:111122223333:secret:dev-*

There’s no single right way to name your secrets, but if you don’t start with a naming convention that you can easily communicate to others, you’ll have a mess when you try to add security. Before you start, think about what you’ll be storing, group that data by who needs access to it, and choose your naming conventions based on those groupings.
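Before committing to a convention, it can help to check which secret ARNs a wildcard pattern would actually cover. The sketch below is a simplified stand-in for IAM's matching, assuming only * (any run of characters) and ? (exactly one character) are wildcards; it is not the IAM policy evaluator. The sample ARNs are hypothetical; their six-character endings mimic the random suffix Secrets Manager appends to every secret ARN, which a trailing * also covers.

```python
import re


def iam_pattern_to_regex(pattern: str) -> re.Pattern:
    """Translate an IAM-style resource pattern into a compiled regex.

    Assumption: only '*' (any run of characters) and '?' (exactly one
    character) are wildcards; every other character matches literally.
    """
    parts = []
    for ch in pattern:
        if ch == "*":
            parts.append(".*")
        elif ch == "?":
            parts.append(".")
        else:
            parts.append(re.escape(ch))
    # \Z forces the pattern to cover the whole ARN, not just a prefix.
    return re.compile("".join(parts) + r"\Z", re.DOTALL)


def arn_matches(pattern: str, arn: str) -> bool:
    """Return True if the ARN is covered by the resource pattern."""
    return iam_pattern_to_regex(pattern).match(arn) is not None


if __name__ == "__main__":
    policy_resource = "arn:aws:secretsmanager:us-west-2:111122223333:secret:dev-*"
    # Hypothetical ARNs; "-AbCdEf" and "-GhIjKl" stand in for the random suffix.
    for arn in (
        "arn:aws:secretsmanager:us-west-2:111122223333:secret:dev-db-AbCdEf",
        "arn:aws:secretsmanager:us-west-2:111122223333:secret:prod-db-GhIjKl",
    ):
        print(arn_matches(policy_resource, arn), arn)
```

Running it shows the dev- secret is covered and the prod- secret is not, which is exactly the kind of grouping a naming convention should make obvious.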

Storing Data in AWS Secrets Manager

There are a few methods to get data into Secrets Manager.

From a Command Line

Start with the command line. I’ll assume you already have the AWS CLI installed and configured with credentials.

This command creates a secret called account-player1 that contains a username and password:

aws secretsmanager create-secret --name account-player1 --secret-string '{"username":"Player1","password":"Password1"}'

Note that the secret string is in JSON format. Storing key-value pairs lets you add any fields you need and parse them out later. For example, you can store a database account together with all of its connection information, so your application retrieves the host and port along with the username and password.

aws secretsmanager create-secret --name db-prod-staffing --secret-string '{"username":"dbuser","password":"Password1","host":"proddb.aws.com","port":"3306"}'
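Hand-writing JSON inside shell quotes is easy to get wrong. As a minimal sketch, assuming you build the secret in a script rather than typing it, Python's json.dumps produces valid JSON, shlex.quote makes it safe to paste into a POSIX shell, and json.loads parses the fields back out. The field names mirror the db-prod-staffing example; the values are placeholders.

```python
import json
import shlex


def build_secret_string(fields: dict) -> str:
    """Serialize key-value pairs into a compact JSON secret string."""
    # separators=(",", ":") drops the spaces, matching the compact style above.
    return json.dumps(fields, separators=(",", ":"))


def parse_secret_string(secret_string: str) -> dict:
    """Parse a JSON secret string back into a dictionary."""
    return json.loads(secret_string)


if __name__ == "__main__":
    secret = build_secret_string(
        {
            "username": "dbuser",
            "password": "Password1",
            "host": "proddb.aws.com",
            "port": "3306",
        }
    )
    # shlex.quote wraps the JSON in single quotes for the shell.
    print(
        "aws secretsmanager create-secret --name db-prod-staffing "
        "--secret-string " + shlex.quote(secret)
    )

    conn = parse_secret_string(secret)
    print(conn["host"], conn["port"])
```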

The secret doesn’t have to be JSON. Any text can be the secret string, even the contents of an entire file:

aws secretsmanager create-secret --name private-key --secret-string file://key.pem
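Because file:// passes the file’s contents as the secret string, it’s worth checking the file size first. A small sketch, assuming a 65,536-byte limit on a secret value (the documented Secrets Manager quota; confirm it against the current quotas page) and a UTF-8 text file such as key.pem:

```python
from pathlib import Path

# Assumed maximum size of a secret value, per the Secrets Manager quotas.
MAX_SECRET_BYTES = 65_536


def check_secret_size(data: bytes) -> None:
    """Raise ValueError if the payload is too large for a single secret."""
    if len(data) > MAX_SECRET_BYTES:
        raise ValueError(
            f"secret is {len(data)} bytes; the limit is {MAX_SECRET_BYTES}"
        )


def read_secret_file(path: str) -> str:
    """Read a text file such as key.pem and confirm it fits in one secret."""
    data = Path(path).read_bytes()
    check_secret_size(data)
    return data.decode("utf-8")
```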

In Terraform

Some resources need a username and password when Terraform creates them. Since you don’t want passwords stored in code, you can generate a random password and store it in Secrets Manager.

Here, I add a new version to the secret whose name is defined in local.accountName. The value contains the username from local.username and a randomly generated password. The secret itself must already exist or be created elsewhere in your configuration.

resource "random_password" "pwd" {
  length           = 16
  special          = true
  override_special = "_%@"
}

resource "aws_secretsmanager_secret_version" "version" {
  secret_id     = local.accountName
  secret_string = <<EOF
{
  "username": "${local.username}",
  "password": "${random_password.pwd.result}"
}
EOF
}
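For a feel of what random_password produces, or to generate a comparable value outside Terraform, here’s a rough Python equivalent built on the standard secrets module. It mirrors only the length, special, and override_special settings; it isn’t the provider’s implementation and doesn’t enforce per-class minimums (random_password exposes min_lower, min_upper, min_numeric, and min_special for that).

```python
import json
import secrets
import string

# Mirrors the Terraform arguments above: length = 16, special = true,
# override_special = "_%@" (letters, digits, and only those specials).
ALPHABET = string.ascii_letters + string.digits + "_%@"


def generate_password(length: int = 16, alphabet: str = ALPHABET) -> str:
    """Return a random password drawn from a cryptographically secure RNG."""
    return "".join(secrets.choice(alphabet) for _ in range(length))


def build_account_secret(username: str) -> str:
    """Build the same JSON shape as the heredoc: username plus password."""
    return json.dumps({"username": username, "password": generate_password()})


if __name__ == "__main__":
    print(build_account_secret("Player1"))
```

Serializing with json.dumps instead of string interpolation also keeps the JSON valid if you later widen the special-character set to include quotes or backslashes.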

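Remember: by default, deleted secrets aren’t removed immediately. Secrets Manager schedules them for deletion after a recovery window of 7 to 30 days, with 30 as the default. If you run terraform destroy, the secret is scheduled for deletion, and you can’t create a new secret with the same name until that window ends. To skip the wait, set recovery_window_in_days = 0 on the aws_secretsmanager_secret resource, or run aws secretsmanager delete-secret with --force-delete-without-recovery.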
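When planning a destroy-and-recreate cycle, the arithmetic is just the destroy time plus the recovery window. A minimal sketch, assuming a window between 7 and 30 days and treating the result as the earliest moment the name becomes reusable (the actual deletion can happen somewhat later):

```python
from datetime import datetime, timedelta, timezone

DEFAULT_RECOVERY_DAYS = 30


def deletion_date(deleted_at: datetime, recovery_days: int = DEFAULT_RECOVERY_DAYS) -> datetime:
    """Return the earliest time a secret scheduled for deletion is removed.

    Until then, its name can't be reused. Assumes the standard 7 to 30 day
    window; a forced deletion (window of 0) is not modeled here.
    """
    if not 7 <= recovery_days <= 30:
        raise ValueError("recovery window must be between 7 and 30 days")
    return deleted_at + timedelta(days=recovery_days)


if __name__ == "__main__":
    destroyed = datetime(2022, 4, 11, tzinfo=timezone.utc)  # hypothetical date
    print(deletion_date(destroyed).date())     # 2022-05-11
    print(deletion_date(destroyed, 7).date())  # 2022-04-18
```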

In the GUI

Sometimes you’ll just want to use Click-Ops, so let’s talk through that process.

Go to Secrets Manager and select Store a new secret. You’ll be presented with several secret types. For credentials to an Amazon resource, such as an RDS database, enter the username and password and then select the resource. The other database option also prompts for connection details such as host and port.


You can also select Plaintext instead of Key/value and then paste in the secret.


Select Next, enter the secret name and optional description and click Next again.

On the next screen, you can enable automatic rotation of the secret by setting a rotation schedule and selecting the Lambda function that performs the rotation. You’ll need to create that Lambda function yourself.

Click Next to review the settings. This page also shows sample code you can use to retrieve the secret directly from your application.

Click Store to save the secret. You'll need to refresh the screen to see the secret listed.
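The rotation Lambda mentioned above is invoked by Secrets Manager once per step, with a Step value of createSecret, setSecret, testSecret, or finishSecret, along with the SecretId and a ClientRequestToken that identifies the new version. The sketch below shows only that control flow and the AWSCURRENT, AWSPENDING, and AWSPREVIOUS staging labels. It assumes an in-memory dict in place of both the Secrets Manager API and the target database; a real function would make the corresponding API calls in each step.

```python
import json
import secrets
import string

# Hypothetical in-memory stand-in for one secret: version id -> (labels, value).
STORE: dict = {}


def _version_with_label(label: str) -> str:
    """Find the version id that currently carries a staging label."""
    for version_id, (labels, _) in STORE.items():
        if label in labels:
            return version_id
    raise KeyError(label)


def step_create(token: str) -> None:
    """createSecret: store a new password under AWSPENDING (idempotent)."""
    if token in STORE:
        return
    value = json.loads(STORE[_version_with_label("AWSCURRENT")][1])
    alphabet = string.ascii_letters + string.digits
    value["password"] = "".join(secrets.choice(alphabet) for _ in range(16))
    STORE[token] = ({"AWSPENDING"}, json.dumps(value))


def step_set(token: str) -> None:
    """setSecret: a real function would change the password on the service."""


def step_test(token: str) -> None:
    """testSecret: a real function would log in with the pending credentials."""
    if "password" not in json.loads(STORE[token][1]):
        raise ValueError("pending version has no password")


def step_finish(token: str) -> None:
    """finishSecret: promote the pending version to AWSCURRENT."""
    old = _version_with_label("AWSCURRENT")
    if old == token:
        return
    for labels, _ in STORE.values():
        labels.discard("AWSPREVIOUS")
    STORE[old] = ({"AWSPREVIOUS"}, STORE[old][1])
    STORE[token] = ({"AWSCURRENT"}, STORE[token][1])


STEPS = {
    "createSecret": step_create,
    "setSecret": step_set,
    "testSecret": step_test,
    "finishSecret": step_finish,
}


def lambda_handler(event: dict, context=None) -> None:
    """Dispatch on Step; this sketch tracks one secret, so SecretId is unused."""
    STEPS[event["Step"]](event["ClientRequestToken"])
```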

Retrieving Data from AWS Secrets Manager

From the GUI

From the GUI, you can select your secret to see its information. Select Retrieve secret value in the Secret value box to see the data.


Source Code

Scroll down to see the sample code needed to retrieve the secret.
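The console’s sample code is built around the SDK’s GetSecretValue call. As a hedged Python sketch of the same idea (not the console’s generated code), the function below takes any client with a boto3-style get_secret_value method and parses the JSON payload. FakeSecretsClient is a hypothetical helper for running it without AWS access.

```python
import json


def get_secret_dict(client, secret_id: str) -> dict:
    """Fetch a secret and parse its JSON payload.

    `client` is expected to behave like boto3.client("secretsmanager"):
    get_secret_value(SecretId=...) returns a dict with SecretString for
    text secrets or SecretBinary (bytes) for binary ones.
    """
    response = client.get_secret_value(SecretId=secret_id)
    if "SecretString" in response:
        return json.loads(response["SecretString"])
    # Assumption: binary secrets in this setup also contain JSON.
    return json.loads(response["SecretBinary"])


class FakeSecretsClient:
    """Hypothetical test double so the function can run without AWS."""

    def __init__(self, secrets_by_id: dict):
        self._secrets = secrets_by_id

    def get_secret_value(self, SecretId: str) -> dict:
        return {"Name": SecretId, "SecretString": self._secrets[SecretId]}


# With real credentials you would pass a boto3 client instead:
#   import boto3
#   client = boto3.client("secretsmanager", region_name="us-east-1")
#   creds = get_secret_dict(client, "account-player1")
```

Accepting the client as a parameter keeps the parsing logic testable and lets the same function work with whatever region or credentials your application configures.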


GitHub Pipeline

So why retrieve secrets from Secrets Manager when GitHub already stores secrets that are readily accessible? It all comes down to having a single source of truth. Pulling the credentials from Secrets Manager at run time means the pipeline always uses the latest set.

But how do we get the secrets from Secrets Manager into our pipeline? Bitovi has a fantastic GitHub action that retrieves the secrets and sets them up as environment variables for use in your pipeline.

Below is a sample workflow that uses the Bitovi GitHub Action to parse AWS secrets into environment variables. It’s used in conjunction with the aws-actions/configure-aws-credentials action, which configures the AWS credentials.

Since my secret is JSON, I set parse-json to true. The action then appends each key to the secret name to form a variable name, and that variable contains the key’s value.

name: Demo AWS Secrets
on:
  workflow_dispatch: {}
env:
  secret: account-player1
  aws-region: us-east-1
jobs:
  demo:
    runs-on: ubuntu-latest
    steps:
      - name: Configure AWS Credentials
        uses: aws-actions/configure-aws-credentials@v1
        with:
          aws-access-key-id: $
          aws-secret-access-key: $
          aws-region: $0
      - name: Read secret from AWS SM into environment variables
        uses: bitovi/github-actions-aws-secrets-manager@v2.0.0
        with:
          secrets: |
            $
          parse-json: true
      - run: |
          echo The user is: $
          echo The password is: $

Since the name of my secret isn’t POSIX compliant (it contains dashes), I get a warning.


The action replaced the dashes with underscores so the variable names are valid. That’s expected, which is why it’s only a warning.
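To predict the variable names before writing the echo or migration steps, here’s a rough Python approximation of the behavior described: the key is appended to the secret name, and characters that aren’t valid in a POSIX variable name become underscores. It is not the action’s source code; check the action’s README for its exact rules, such as letter casing.

```python
import json
import re
from typing import Optional


def env_var_name(secret_name: str, key: Optional[str] = None) -> str:
    """Approximate the variable naming described above.

    The JSON key (if any) is appended with an underscore, and every character
    that isn't a letter, digit, or underscore is replaced with an underscore.
    """
    raw = secret_name if key is None else f"{secret_name}_{key}"
    return re.sub(r"[^A-Za-z0-9_]", "_", raw)


def secret_to_env(secret_name: str, secret_string: str) -> dict:
    """Map each key of a JSON secret to an environment variable name."""
    return {
        env_var_name(secret_name, key): str(value)
        for key, value in json.loads(secret_string).items()
    }


if __name__ == "__main__":
    env = secret_to_env(
        "account-player1", '{"username":"Player1","password":"Password1"}'
    )
    print(sorted(env))  # ['account_player1_password', 'account_player1_username']
```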

Looking at the output of the run step, we can see that GitHub masks the secret values in the log, even the username, so they don’t show up in the echo output. This is by design.


I’m using this as part of a database migration. The secret also includes the database host and port, so with just a few lines of YAML, all of the connection details are already in variables. And because AWS is my single source of truth, I only have to update a value in one place for every pipeline to pick it up.

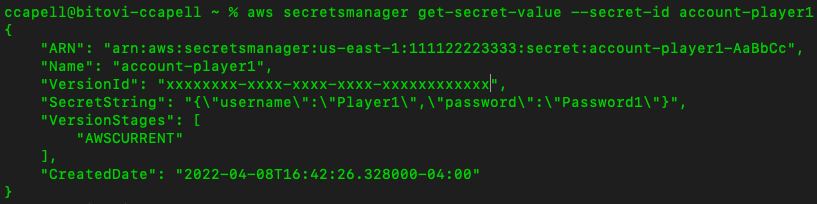

From a Command Line

You can also retrieve the values via a command line.

aws secretsmanager get-secret-value --secret-id account-player1
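The command prints a JSON envelope in which SecretString holds your secret as an escaped string; adding the global --query SecretString --output text options prints just that string. If a script consumes the full output instead, a minimal Python sketch of the required double decode looks like this (the sample envelope is illustrative, with placeholder values):

```python
import json


def secret_from_cli_output(cli_output: str) -> dict:
    """Decode the secret fields from `aws secretsmanager get-secret-value` output.

    The CLI prints a JSON envelope (Name, ARN, VersionId, SecretString, ...).
    SecretString is itself a JSON document stored as a string, so it needs a
    second json.loads.
    """
    envelope = json.loads(cli_output)
    return json.loads(envelope["SecretString"])


if __name__ == "__main__":
    # Illustrative envelope with placeholder values, not real CLI output.
    sample = json.dumps(
        {
            "Name": "account-player1",
            "SecretString": json.dumps({"username": "Player1", "password": "Password1"}),
            "VersionStages": ["AWSCURRENT"],
        }
    )
    print(secret_from_cli_output(sample)["username"])  # Player1
```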

External Secrets Operator

If you’re working with Kubernetes, the External Secrets Operator can take secrets from AWS Secrets Manager and sync them into Kubernetes Secrets. By deploying an ExternalSecret object that contains only the secret name and its destination, we can securely get secrets into our cluster. As a bonus, when we update the values in AWS Secrets Manager, the operator updates the Kubernetes Secret on its next refresh.
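To picture what ends up in the cluster, here’s a sketch of the Kubernetes Secret that results when each key of a JSON secret becomes its own entry, roughly what an ExternalSecret using dataFrom with extract produces. This is an illustration only: the operator builds and updates the Secret for you, and the name and namespace used in the usage line are hypothetical. Kubernetes stores Secret data values base64-encoded, which the sketch reproduces.

```python
import base64
import json


def to_k8s_secret(name: str, namespace: str, secret_json: str) -> dict:
    """Build a Kubernetes Secret manifest with one data entry per JSON key."""
    fields = json.loads(secret_json)
    data = {
        # Secret `data` values must be base64-encoded strings.
        key: base64.b64encode(str(value).encode("utf-8")).decode("ascii")
        for key, value in fields.items()
    }
    return {
        "apiVersion": "v1",
        "kind": "Secret",
        "metadata": {"name": name, "namespace": namespace},
        "type": "Opaque",
        "data": data,
    }


if __name__ == "__main__":
    manifest = to_k8s_secret(
        "account-player1", "default", '{"username":"Player1","password":"Password1"}'
    )
    print(json.dumps(manifest, indent=2))
```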

Give AWS Secrets Manager a Try

If you’re using AWS, I recommend taking a few minutes to see whether AWS Secrets Manager is right for you. I’ve shown several ways to store and retrieve secrets, and access to all of them can be controlled with IAM policies.

Need assistance?

Bitovi has a team of experienced DevOps engineers that can work with your team to design and implement a solution that's right for you.
